Winter Program in Machine Learning 2024

January 15-19, 2024

This winter program provides 5 days of concentrated study of topics in the mathematical foundations of Machine Learning at the graduate level.

The school will offer 2 courses on topics at the forefront of current research in Machine Learning. Lectures will be paired with discussion sessions and programming projects. During discussion sessions, students will be divided into small groups and work on sets of problems related to the courses' topics.

Organizers: Matias Delgadino, Joe Kileel, and Richard Tsai.

Support: NSF RTG 1840314, NSF DMS 220593

Lecturers (advanced courses):

Nicolas Garcia-Trillos (University of Wisconsin-Madison)

Joe Kileel (UT Austin)

Program: Classes will be held at PMA 8.136

Morning Session: Adversarial machine learning

Theory: 9:00-10:30

Exercises: 11:00-12:30 (Led by Axel Turnquist)

Afternoon Session: Methods for shape space analysis

Theory: 13:30-15:00

Exercises: 15:30-17:00 (Led by Julia Lindberg)

For up-to-date information, please join the Discord server:

https://discord.gg/RyPfawkFpN

Titles and Abstracts:

Mathematics of adversarial machine learning (Nicolas Garcia-Trillos)

Abstract:

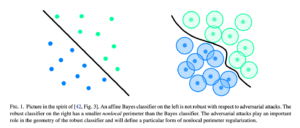

Adversarial machine learning is an area of modern machine learning whose main goal is to study and develop methods for designing learning models that are robust to adversarial perturbations of data. It became a prominent research field less than a decade ago, not long after neural networks became the state-of-the-art technology for image processing and natural language processing tasks. At that time it was noticed that neural network models, as well as other learning models, were quite sensitive to adversarial attacks despite being highly effective at making accurate predictions on clean data.

This mini-course explores the mathematical underpinnings of this active and vibrant field. We will be particularly interested in analytic and geometric perspectives and in connections with topics such as regularization theory, game theory, optimal transport, geometry, and distributionally robust optimization. The mini-course aims to present adversarial machine learning within the broader objective of designing safe, secure, and trustworthy AI models.

Tensor Methods in Data Science (Joe Kileel)

Abstract:

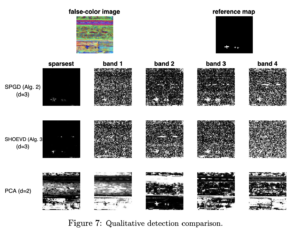

High-dimensional tensors arise ubiquitously in data processing tasks, from network science to statistics to PDEs to signal processing. The extension of numerical linear algebra to its higher-order counterpart involves many twists and turns and is an area of current research. These lectures will give an overview of the motivation for working with tensors, different tensor formats and numerical algorithms for computing them, applications, and some relevant notions from geometry, optimization, and numerical analysis. Exercise sessions will include hands-on programming.